Black Latents | Latent Diffusion is a Gradio application that lets you generate audio items with RAVE-Latent Diffusion models and Black Latents, a RAVE V2 VAE trained on the Black Plastics series.

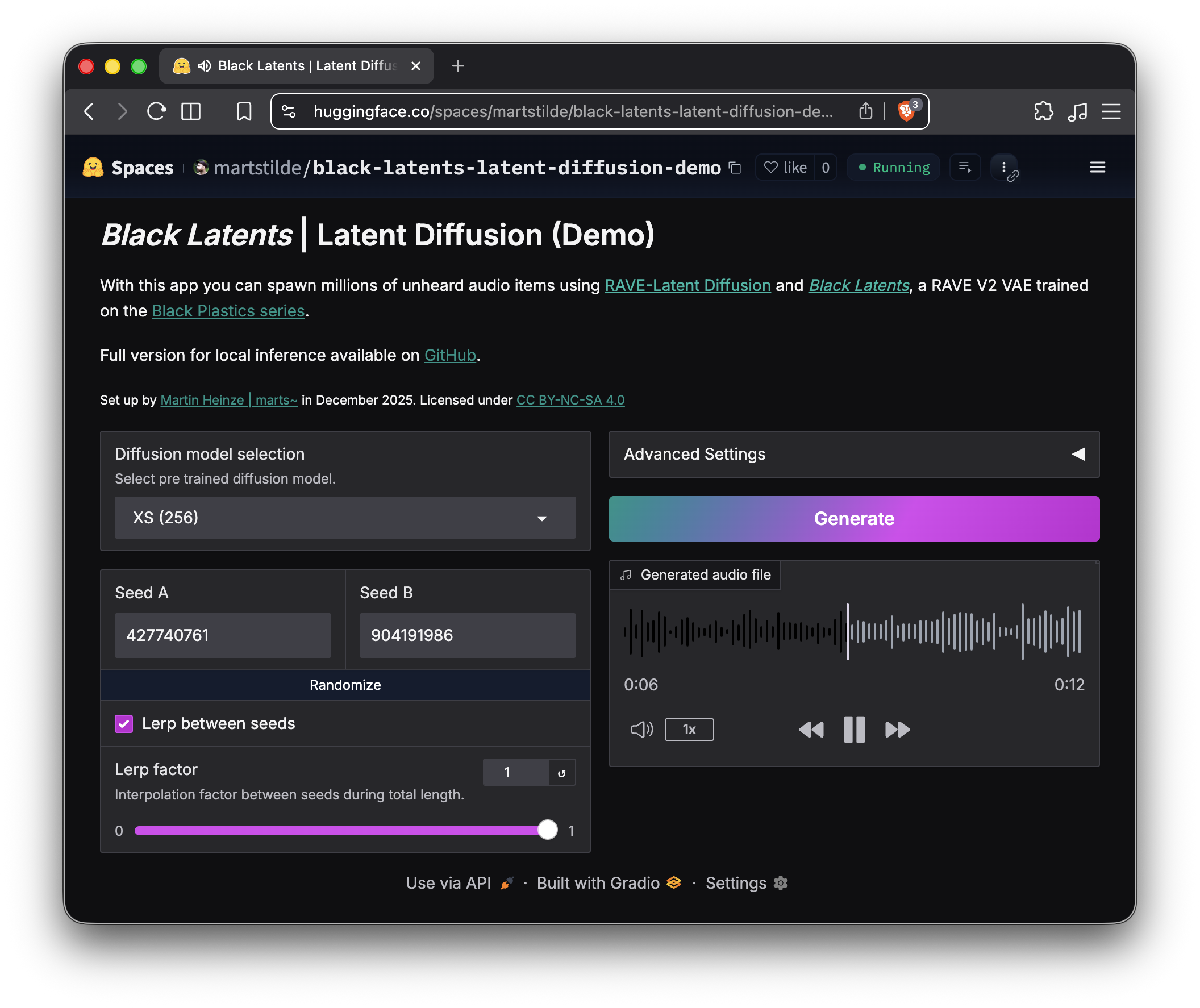

A demo version is accessible on Hugging Face. The full application can be retrieved from GitHub for local inference.

Latent Diffusion with RAVE

The RAVE architecture makes timbre transfer on audio input possible, but you can also generate audio by using its decoder layer as a neural audio synthesizer, e.g. in Latent Jamming.

Another approach to using RAVE to spawn new audio material has been provided by Moisés Horta Valenzuela (aka 𝔥𝔢𝔵𝔬𝔯𝔠𝔦𝔰𝔪𝔬𝔰) with his RAVE-Latent Diffusion model.

Latent diffusion models in general are quite efficient since they operate on highly compressed representations of the original data. The key idea of RAVE-Latent Diffusion is to replicate the structural coherence of audio by encoding (longer) audio sequences into their latent representations using a RAVE encoder and then training a denoising diffusion model on these embeddings. The trained model can unconditionally generate new, similar sequences of the same length, which can be decoded back into the audio domain using the RAVE model’s decoder.
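To make the "denoising" part a little more concrete, here is a minimal sketch of the noising step such a model is trained to undo. It is a generic illustration under my own assumptions, not the package's code: the function name `add_noise`, the list-of-lists latent layout and the cosine/sine weighting are all illustrative choices, and the actual noise schedule used by RAVE-Latent Diffusion may differ.

```python
import math
import random


def add_noise(latent, sigma, rng):
    """Blend a latent sequence with Gaussian noise.

    latent: list of channels, each a list of floats (hypothetical layout).
    sigma:  noise level in [0, 1]; 0 keeps the latent, 1 is pure noise.
    rng:    a random.Random instance, so results are reproducible.
    """
    alpha = math.cos(sigma * math.pi / 2)  # weight of the clean latent
    beta = math.sin(sigma * math.pi / 2)   # weight of the noise
    noise = [[rng.gauss(0.0, 1.0) for _ in channel] for channel in latent]
    noisy = [
        [alpha * x + beta * n for x, n in zip(channel, noise_channel)]
        for channel, noise_channel in zip(latent, noise)
    ]
    return noisy, noise


# Toy "embedding" with 2 channels and 3 frames (made-up values).
toy_latent = [[0.5, -1.0, 2.0], [0.0, 1.5, -0.25]]
half_noisy, used_noise = add_noise(toy_latent, 0.5, random.Random(0))
```

During training, a network would see `half_noisy` together with the noise level and learn to recover the clean latent (or the noise). Generation then starts from pure noise and applies the learned denoising repeatedly.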

The original package by 𝔥𝔢𝔵𝔬𝔯𝔠𝔦𝔰𝔪𝔬𝔰 supports latent embedding lengths down to a window size of 2048, which translates to about 95 seconds of audio at 44.1 kHz and is suited to capturing composition-level structure.

In my fork, RAVE-Latent Diffusion (Flex’ed), I extended the code to support a minimum window size of 256, which equals about 12 seconds at 44.1 kHz, and implemented a few other improvements and additional training options.
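The durations quoted for the two window sizes can be checked with a little arithmetic. The sketch below assumes that each latent frame covers 2048 audio samples; that compression ratio is not stated above but is the value implied by the 95-second figure, so treat it as an assumption. The constant and function names are illustrative.

```python
SAMPLE_RATE = 44_100       # Hz, as quoted in the text
COMPRESSION_RATIO = 2048   # assumed audio samples per latent frame


def window_seconds(latent_frames, sample_rate=SAMPLE_RATE, ratio=COMPRESSION_RATIO):
    """Return the audio duration in seconds covered by a latent window."""
    return latent_frames * ratio / sample_rate


for frames in (256, 2048):
    print(f"{frames} latent frames ~ {window_seconds(frames):.1f} s")
# 256 latent frames ~ 11.9 s
# 2048 latent frames ~ 95.1 s
```

With a different sample rate or a RAVE model that uses another compression ratio, the same window sizes would map to different durations.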

Black Latents: turning Black Plastics into a RAVE model

The motivation for training Black Latents was to extract dominant characteristics from my Black Plastics series, a compilation of 7 EPs with a total of 28 tracks spanning Experimental Techno, Breakbeats and Drum & Bass, which I released between 2012 and 2020.

I trained the model using the RAVE V2 architecture with a higher capacity of 128 and submitted it to the RAVE model challenge 2025 hosted by IRCAM, where it was publicly voted into first place. The model is available on the Forum IRCAM website.

Using Black Latents | Latent Diffusion to spawn audio

For Black Latents | Latent Diffusion, I trained diffusion models in 7 different configurations and context window lengths, again using the audio material from the Black Plastics series as the base dataset together with the Black Latents VAE.

The application itself is a simple Gradio interface to the generate script of RAVE-Latent Diffusion (Flex’ed). In the UI, you can choose from the different diffusion models, define seeds and set additional parameters such as temperature or latent normalization before generating audio items through the Black Latents model decoder.
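As a rough illustration of what seed and temperature do, the sketch below draws the starting noise for one generation. It rests on the assumption that the temperature simply scales the Gaussian noise the diffusion starts from; the function name `initial_noise`, its arguments and the channel/frame sizes are hypothetical and not taken from the generate script.

```python
import random


def initial_noise(seed, channels, frames, temperature=1.0):
    """Draw seeded Gaussian noise, scaled by temperature (assumed behavior).

    The same seed always yields the same noise, which is what makes a
    generated item reproducible; a higher temperature widens the spread.
    """
    rng = random.Random(seed)
    return [
        [rng.gauss(0.0, 1.0) * temperature for _ in range(frames)]
        for _ in range(channels)
    ]


# Hypothetical sizes: 4 latent channels, a window of 256 frames.
calm = initial_noise(seed=42, channels=4, frames=256, temperature=0.8)
wild = initial_noise(seed=42, channels=4, frames=256, temperature=1.2)
```

Here `calm` and `wild` share the same underlying random draw and differ only in scale, so comparing generations across temperatures with a fixed seed isolates the effect of that one parameter.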

Depending on the diffusion model and parameter selection, the output ranges from stumbling rhythmic micro-structures to items that resemble the macro-scale structure of the base training data.

Other examples

I published earlier experiments with RAVE-Latent Diffusion and a different set of RAVE models in the form of two albums:

MARTSMÆN – RLDG_0da02c80cb [datamarts/2KOMMA4]: Bandcamp, Nina

MARTSM^N – RLDG_835770db1c [datamarts/2KOMMA3]: Bandcamp, Nina